Here I show some of the main research I’ve done between 2020 and 2025. It features innovative methods for selecting suitable compute resources for any large-scale data processing workload. This work will conclude with a doctoral degree in late January 2026.

Research Problem

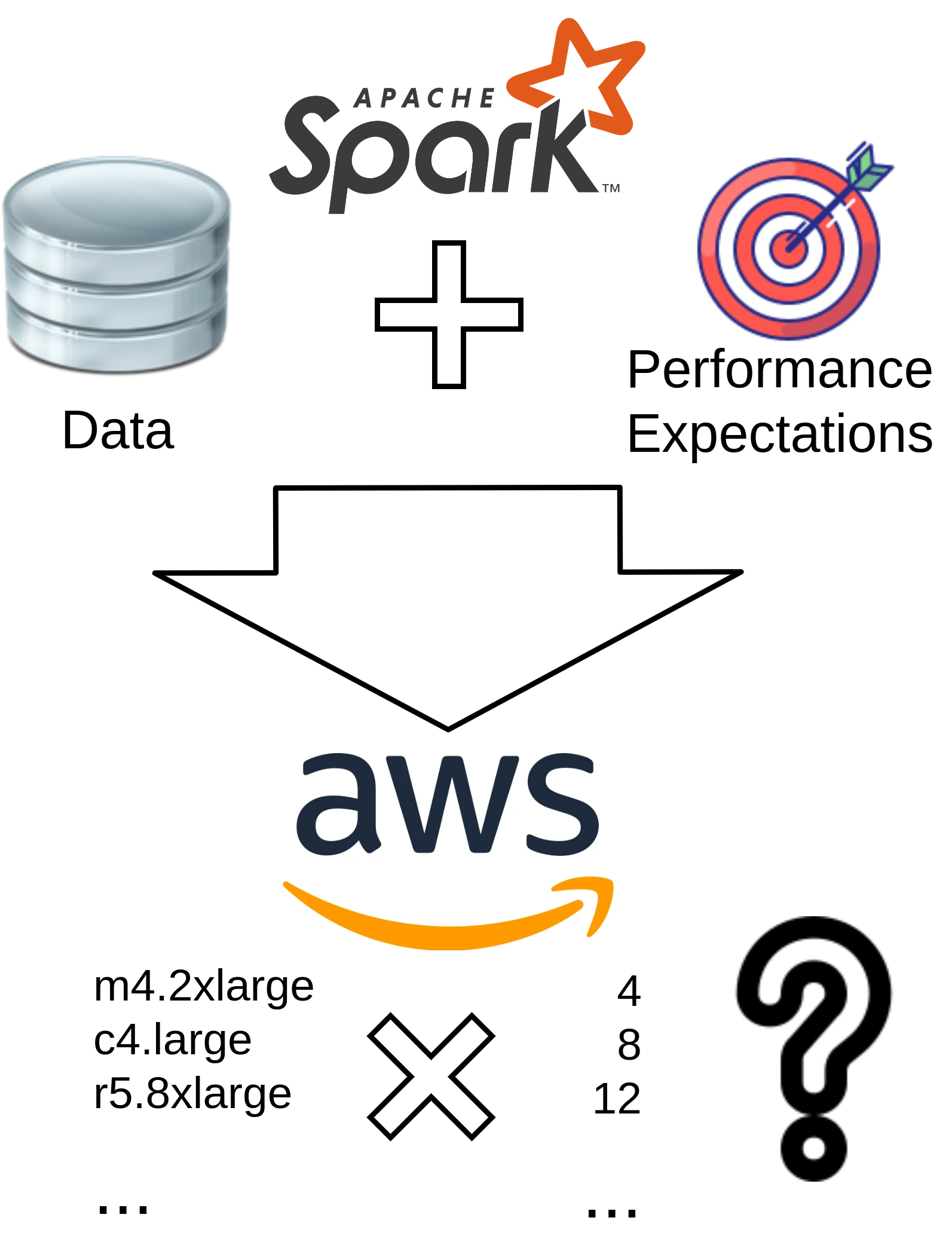

Distributed dataflow systems such as Apache Spark and Apache Flink enable data-parallel processing of large datasets on clusters. Yet, selecting appropriate computational resources for dataflow jobs –– that neither lead to bottlenecks nor to low resource utilization –– is often challenging, even for expert users such as data engineers. An efficient configuration of resources optimizes for low execution cost and low execution duration simultaneously. This is an optimization problem that many researchers around the world are currently working on.

Main Results

The following presents some of my main works tackling the

aforementioned research problem.

Towards Collaborative Optimization of Cluster Configurations for Distributed Dataflow Jobs [PDF]

This paper provides a problem analysis of model-based performance

prediction of dataflow jobs on different types of cloud infrastructures.

It identifies how different aspects of the execution context contribute

and draws conclusions for designing collaborative systems for sharing

performance metrics and performance models for data processing jobs.

C3O: Collaborative Cluster Configuration Optimization for Distributed Data Processing in Public Clouds [PDF]

This work identifies how different aspects of the execution context

contribute and designs a collaborative system for sharing performance

metrics and performance models for data processing jobs. It includes

system specifications and generalized performance models, which are

implementated and evaluated. By considering the broader execution

context, these performance models have shown better results than

performance models from related work.

Training Data Reduction for Performance Models of Data Analytics Jobs in the Cloud [PDF]

This paper discusses the sharing aspect of data, with a particular

focus on increasing resource efficiency when storing and exchanging

potentially large amounts of data.

Get Your Memory Right: The Crispy Resource Allocation Assistant for Large-Scale Data Processing [PDF]

This work focuses on appropriate memory allocation, since this has

shown to typically have the most significant impact on

resource-efficiency. Systems like Spark use memory for caching parts of

the dataset. Meanwhile, a lack of memory leads to more intensive use of

the disk, slowing down the processing. By conducting profiling runs on

reduced hardware, and on small samples of the input dataset, we can

extrapolate memory use of the full execution. The derived resource

configurations have shown high cost-efficiency when compared to related

work.

Flora: Efficient Cloud Resource Selection for Big Data Processing via Job Classification [PDF]

This most recent work uses statistical methods to in combination with

infrastructure profiling to predict resource options’ relative

posititions in the cost–duration space. It exploits the similarity of

resource preferences of jobs with similar memory access patterns. The

derived resource configurations have shown high resource-efficiency.

Further, this method features very low exploration overhead, when

compared to related work. Keeping this resource selection overhead low

is a central theme in my doctoral thesis, which will soon be published

here.

A complete list of more than 20 papers I co-authored during my time in research is available on my Google Scholar profile.