OPTIMA was a joint industry/research project that aimed to automate parts of the municipal wastewater management system. My contribution was a scalable data stream processing platform that enables communication between the various system components.

Project Description

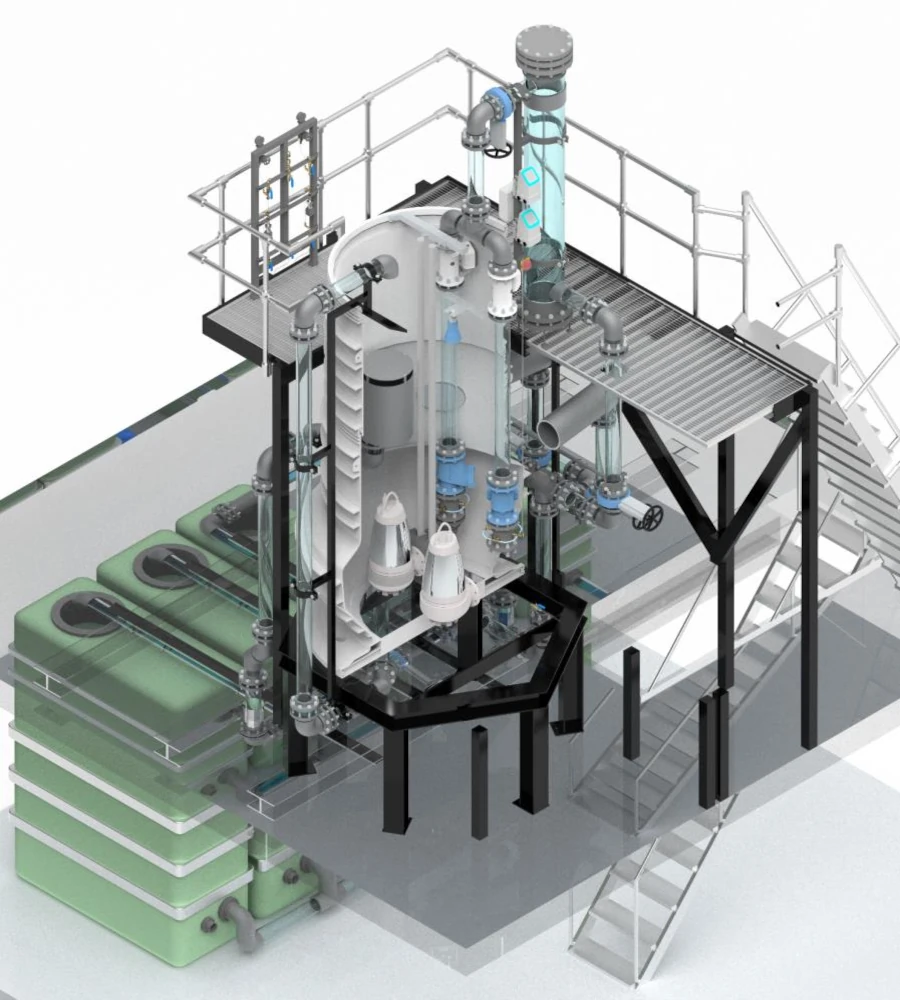

Combined sewer overflows (CSOs) are an environmental problem. They can occur when heavy rainfall floods the sewers and the excess contents spill into city waterways, where they threaten wildlife. Many cities with sewers dating back to the 19th century or earlier face this problem, because their sewers do not separate wastewater from stormwater and retrofitting is usually impractical. Berlin is one such city.

When the project began, CSOs were avoided by preemptively pumping wastewater into storage basins to reserve capacity for possible heavy rain events. However, this comes at a significant energy cost.

Together with the project partners, we developed a system for predictive wastewater pumping based on expected rainfall. Its goal is to avoid CSOs while using less energy than the original approach.
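To make the energy argument concrete, here is a deliberately simplified toy model, not the project's actual control logic. It assumes a single storage volume with a known capacity and fill level, a rainfall forecast already converted into an expected inflow volume, and pumping energy that grows with the pumped volume. The preemptive baseline is modeled (as an assumption) as freeing all stored volume in advance, while the predictive variant frees only the volume the forecast inflow would otherwise overflow. All function names are hypothetical.

```python
def preemptive_pump_volume(fill_m3: float) -> float:
    """Hypothetical baseline: free all stored volume regardless of the forecast."""
    return fill_m3


def predictive_pump_volume(fill_m3: float, capacity_m3: float,
                           forecast_inflow_m3: float) -> float:
    """Hypothetical predictive rule: pump only what the forecast inflow requires.

    The excess is the volume by which fill + forecast inflow would exceed
    capacity. It is capped at the current fill, since no more than the
    stored volume can be pumped.
    """
    excess = fill_m3 + forecast_inflow_m3 - capacity_m3
    return min(fill_m3, max(0.0, excess))


# Example with made-up numbers: capacity 1000 m3, current fill 600 m3.
for inflow in (300, 700):
    print(inflow, preemptive_pump_volume(600), predictive_pump_volume(600, 1000, inflow))
```

With a light forecast the predictive rule pumps nothing, and with a heavier one it pumps only the overflowing share; under the assumption that energy scales with pumped volume, that difference is where savings would come from.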

| Stakeholder | Role |
|---|---|
| TU Berlin: Distributed and Operating Systems (DOS) | Partner (my group) |
| TU Berlin: Fluidsystemdynamik (FSD) | Partner |
| Ingenieurbüro Sieker | Partner |
| Fraunhofer FOKUS | Partner |
| Investitionsbank Berlin (IBB) | Funding, monitoring |
| Investitionsbank Brandenburg (ILB) | Funding, monitoring |
| Berliner Wasserbetriebe (BWB) | Prospective client |

Personal Contributions

As part of a team within the DOS research group at the TU Berlin, I contributed the following:

- Assisted project partners in developing the software for their parts of the overall system

- Developed and deployed a Kafka-based distributed stream processing platform for live analysis of critical urban infrastructure data

- Developed an Apache Flink job that combines load predictions, checks their formatting and value plausibility, and dynamically decides which load prediction to send to the water pump controllers

- Deployed the platform in a fault-tolerant and scalable manner using Kubernetes and Helm

- Tested scale-out behavior and automatic failure recovery
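The selection step of the Flink job can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the deployed Flink code: the record fields, the plausibility bounds, the 15-minute freshness limit, and the fixed source priority order are all hypothetical, and it assumes at most one prediction per source is considered at a time.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence

# Hypothetical plausibility bounds; real limits would come from the operator.
MIN_FLOW = 0.0
MAX_FLOW = 10_000.0


@dataclass
class LoadPrediction:
    source: str           # hypothetical identifier of the predicting model
    timestamp: int        # time the prediction was made (epoch seconds)
    flow_m3_per_h: float  # predicted load


def is_plausible(p: LoadPrediction) -> bool:
    """Format and value check: a finite number within the assumed bounds."""
    return (isinstance(p.flow_m3_per_h, (int, float))
            and math.isfinite(p.flow_m3_per_h)
            and MIN_FLOW <= p.flow_m3_per_h <= MAX_FLOW)


def select_prediction(candidates: Sequence[LoadPrediction],
                      priority: Sequence[str],
                      now: int,
                      max_age_s: int = 900) -> Optional[LoadPrediction]:
    """Return the highest-priority prediction that is plausible and fresh."""
    valid = {p.source: p for p in candidates
             if is_plausible(p) and 0 <= now - p.timestamp <= max_age_s}
    for source in priority:
        if source in valid:
            return valid[source]
    return None  # nothing usable; a caller might fall back to a safe default


# Usage: the preferred model emits NaN, so the job falls back to the second one.
preds = [LoadPrediction("model_a", 1000, float("nan")),
         LoadPrediction("model_b", 1000, 420.0)]
chosen = select_prediction(preds, ["model_a", "model_b"], now=1200)
print(chosen.source if chosen else None)
```

Keeping validation and selection in pure functions like these makes them easy to unit-test; in a Flink job they would typically be called from a process or window function that groups the incoming predictions.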

| Technology Used | Purpose |
|---|---|
| Flink | Distributed data stream processing framework for processing the load prediction data |
| Kafka | Distributed message queue for publish/subscribe-based communication between the system components |
| ZooKeeper | Coordination of the Kafka brokers |
| Cassandra | Distributed database for archiving load predictions and sensor data |
| HDFS | Storage for the Flink job's checkpoints, used by its failure recovery mechanism |
| Prometheus | Capturing system operations metrics |
| Grafana | Visualizing captured system operations metrics |
| Docker | Containerizing components of the data stream processing platform |
| Kubernetes | Orchestrating the containers in a scalable and fault-tolerant cluster |
| Helm | Automated configuration and deployment of the platform components on the Kubernetes cluster |